Are you trying to write a quiz of interesting trivia questions? I was too, so I decided to enlist the help of ChatGPT (any similar AI tool would have worked just as well) to write my questions, create plausible (but wrong) answers for multiple choice questions and add pictures as needed. Here are the prompts I used and the strategy I followed (I'm hoping someone else will find this useful).

Before you continue reading the rest of this blog it may be worth trying the quiz first (no log-on or account needed, I promise).

Generating the Questions - First Pass

So I started by asking ChatGPT to generate interesting trivia questions:

"Please come up with 18 questions for an online quiz. The target audience should be educated people working in office jobs in the UK, usually with computers. Choose quirky and interesting trivia questions which wouldn't be asked in a normal quiz. Please divide your 18 questions into 6 groups, with 3 questions in each:

Technology

Science and Nature

Arts and Entertainment

Geography

History

Current affairs

For each set of 3 questions include one fairly easy one, one middling one and one fairly hard one. Present your answers as a table with 4 columns: Category, Level, Question and Answer."
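If you expect to reuse a prompt like this with different categories, it could be kept as a small template rather than retyped. Here is a minimal Python sketch of that idea. The function name build_quiz_prompt and its categories parameter are made up for illustration; it only assembles the text, which you would still paste into ChatGPT yourself.

```python
def build_quiz_prompt(categories):
    """Build a question-generation prompt in the style of the one above.

    Hypothetical helper for illustration: the name and the single
    `categories` parameter are assumptions, not part of any ChatGPT API.
    """
    total = 3 * len(categories)  # one easy, one middling, one hard per category
    category_lines = "\n".join(categories)
    return (
        f"Please come up with {total} questions for an online quiz. "
        "The target audience should be educated people working in office "
        "jobs in the UK, usually with computers. Choose quirky and "
        "interesting trivia questions which wouldn't be asked in a normal "
        f"quiz. Please divide your {total} questions into "
        f"{len(categories)} groups, with 3 questions in each:\n\n"
        f"{category_lines}\n\n"
        "For each set of 3 questions include one fairly easy one, one "
        "middling one and one fairly hard one. Present your answers as a "
        "table with 4 columns: Category, Level, Question and Answer."
    )


categories = [
    "Technology",
    "Science and Nature",
    "Arts and Entertainment",
    "Geography",
    "History",
    "Current affairs",
]
prompt = build_quiz_prompt(categories)
print(prompt)
```

Changing the list of categories changes the question count automatically, so the numbers in the prompt always agree with each other.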

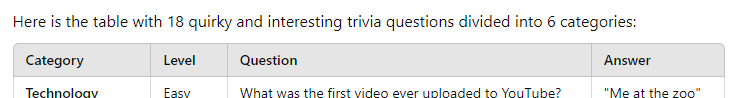

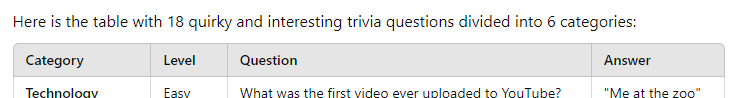

This generated a good set of questions, some of which I used:

The start of the initial list of questions.

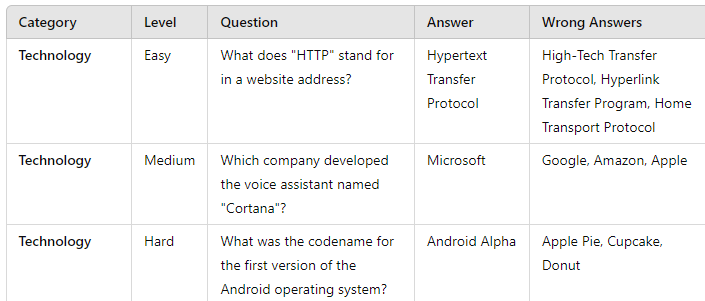

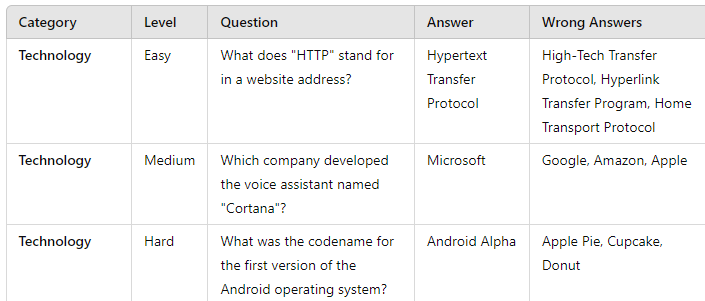

I decided I needed more questions - and some plausible (but wrong) answers, as our quiz format requires each question to have a picture and 4 possible answers, of which (obviously) only one is correct:

"Please try again with 18 new questions. Include a 5th column called "Wrong Answers" giving 3 plausible but wrong answers (I'm doing a multiple choice quiz)."

The answers weren't that good this time, I felt:

I didn't use any of these questions, as I felt they were too ... obvious.

You can keep trying to get different results (I did):

"Please try 18 completely new questions."

There's a concept in AI called iterating until you revert, which basically says that you can only keep tweaking a question so many times before you end up with answers worse than the ones you started with. I felt ChatGPT needed a bit of guidance:

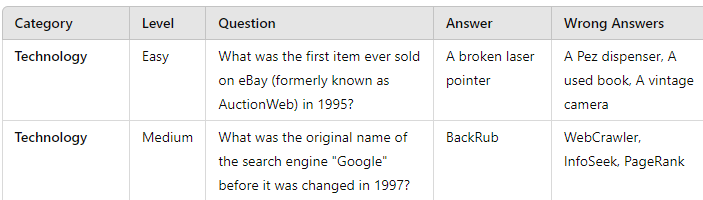

"Please try again, but go for less obvious questions, with more interesting answers - particularly about interesting bits of trivia or facts."

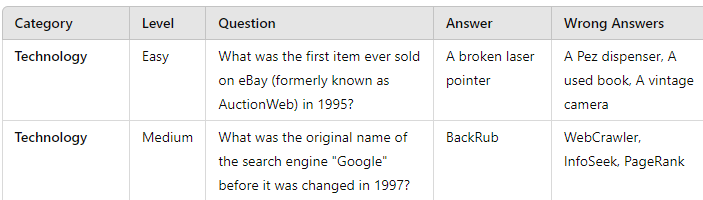

This was much better. I used the first 2 questions shown below:

I used the questions, but not the wrong answers.

I needed a few more ideas (and thought I'd give ChatGPT a bit of encouragement that it was on the right lines):

"These were better - please try 18 more."

Generating the Questions - Second Pass

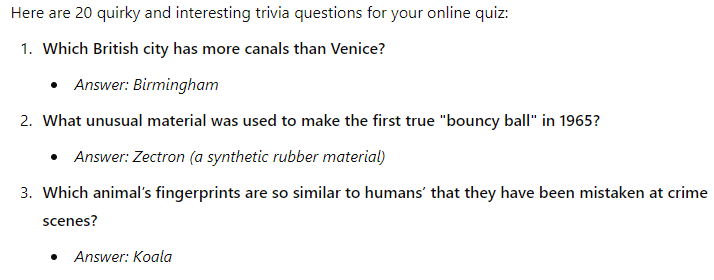

I wanted the quiz to be entertaining - and not predictable - and so still had plenty more slots to fill. I decided to start a new conversation so that ChatGPT could start afresh:

"Please come up with 20 questions for an online quiz. The target audience should be educated adults aged from 20 to 50 in the UK. Please suggest quirky and interesting trivia questions which wouldn't be asked in a normal quiz, especially if the answer is interesting and not generally known."

This time I was giving a more specific target audience for our quiz, including an age range (apologies to our clients who are under 20, over 50 or for whatever reason aren't educated!). This prompt gave me much better results:

Spoiler alert: I used the 3rd question.

I'd clearly hit upon the right prompt, so tried again:

"Great questions - please can I have 20 more?"

And again:

"Please can you give me 20 more?"

And again:

"Great questions - 20 more please?"

By which point I had my 20 questions! Now it was time to start checking that the answers ChatGPT gave were true, and creating 3 plausible (but wrong) answers for each question.

Had I been organised I could have collated the 20 questions for my quiz, then asked ChatGPT to provide up-to-date citations for each correct answer, ideally from Wikipedia or another independent source.

Getting plausible but wrong answers

I'll just give a couple of examples of this, which will give the idea.

ChatGPT helped me create the questions and supplied the illustrations for them, but it was its ability to generate wrong answers which really surprised me. I've found that providing bogus answers is an art form - I love the challenge of trying to come up with answers which sound more likely and convincing than the truth - but I have to admit that in ChatGPT I've met my match.

For the first one, the trivia question asked what karaoke translates to from Japanese (the answer is "empty orchestra"). So I tried this prompt:

"Karaoke means "empty orchestra". Please come up with a few plausible but wrong other possible translations of this term, some of which use humour."

I thought that it was important to ask for humour, as otherwise ChatGPT would generate boring, dry answers (which then also don't sound as plausible). This prompt produced:

I'm not sure I actually used any of these!

Disappointing! Undaunted, I created a new chat window and tried again:

"Karaoke translates as "empty orchestra" from Japanese. Please come up with a few plausible but wrong other possible translations for what karaoke might mean."

This worked much better:

Why did the second prompt work so much better than the first? To be honest, I'm not sure!

One more example - I wanted 3 plausible-but-wrong suggestions for the first use of bubble wrap:

"Bubble wrap was first invented as wallpaper. Please come up with a few plausible (but wrong) possible other things it might have been invented for."

Here are the first few answers suggested:

I got ChatGPT to suggest a few more answers after the first set, and with that I had my 3 wrong answers!
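Once you have a correct answer and 3 wrong ones, they need mixing so that the right answer isn't always in the same position. Here is a rough Python sketch of that step. The helper name make_multiple_choice and the dictionary layout are assumptions for illustration (not the format of any real quiz software), and the wrong answers shown are placeholders rather than the ones ChatGPT suggested.

```python
import random


def make_multiple_choice(question, correct, wrong_answers, rng=None):
    """Combine one correct answer with 3 wrong ones, in shuffled order.

    Hypothetical helper for illustration; the dictionary layout is an
    assumption, not the format of any real quiz software.
    """
    if len(wrong_answers) != 3:
        raise ValueError("expected exactly 3 wrong answers")
    if correct in wrong_answers:
        raise ValueError("the correct answer must not appear among the wrong ones")
    options = [correct, *wrong_answers]
    (rng or random).shuffle(options)  # shuffle in place
    return {
        "question": question,
        "options": options,
        "answer_index": options.index(correct),
    }


# The wrong answers here are placeholders, not the ones ChatGPT suggested.
quiz_question = make_multiple_choice(
    "What was bubble wrap first invented as?",
    "Wallpaper",
    ["Wrong answer 1", "Wrong answer 2", "Wrong answer 3"],
    rng=random.Random(42),  # fixed seed so the order is repeatable
)
print(quiz_question)
```

The two checks at the top catch the easiest mistakes to make when copying answers out of a chat window: ending up with too few wrong answers, or accidentally including the right answer among the wrong ones.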

Providing illustrations

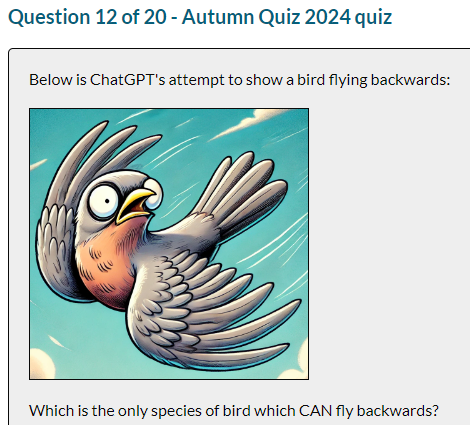

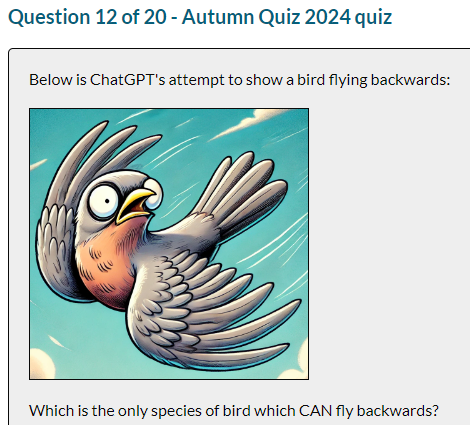

Our quiz format requires not only 4 answers (of which only one is correct), but also a picture. Getting these has proved troublesome in the past (not least because I don't want to fall foul of copyright law), but ChatGPT provides the perfect solution. For example, I wanted to illustrate this question:

I couldn't use a picture of a hummingbird actually flying backwards, since this would give away the answer!

So I tried this:

"Create a funny picture of a sparrow flying backwards."

The results were ... OK, I guess:

It's not really flying backwards, though; more sideways.

Perhaps I was too prescriptive about the type of bird, so next I tried:

"Try again, using any common English bird. Make sure you show that the bird is flying backwards."

This worked perfectly, and produced the image I finally used!

All of the above examples - and especially the last one - illustrate an important point about using AI tools. ChatGPT has "Chat" in the name for a reason: you should be prepared to have a conversation with your AI tool, in which you gradually refine your results till you get what you wanted.

And with that, happy quizzing!